Brain-Computer Interface

Scientific Initiation Project

Introduction

What if machines could understand us—not by what we say or touch, but by what our muscles whisper before we even move? The field of Brain-Computer Interfaces (BCIs) is turning this question into systems—and this project stands at the frontier of that transformation.

Human-Computer Interaction (HCI) has evolved from tools we manipulate to environments that respond. It began with keyboards, mice, and graphic displays, but has now reached a point where signals generated by the human body itself can form the basis of communication. This project explores that shift—developing a non-invasive BCI architecture based on electromyographic (EMG) signals from the forearm, using the Myo Armband as a biological input device.

These signals, processed and interpreted in real time, are mapped to actionable commands capable of controlling external devices. The system proposes a different kind of interface—one that emerges from intention rather than interaction, and places the body at the center of the system.

Rooted in the scientific foundations of action potentials, motor unit activation, and bioelectrical propagation, this research reframes EMG not as noise to be filtered, but as structured information that can be interpreted, translated, and integrated into intelligent systems. What begins as a muscle impulse becomes a communicative bridge between user and machine.

This project contributes a working prototype and scalable system model that demonstrates how biological signals can substitute mechanical inputs. Its application goes beyond academic exploration—it points toward a future of real-time, bio-adaptive control systems that serve diverse users and contexts.

In this paradigm, interaction becomes more than functional—it becomes inclusive. For individuals with reduced mobility or physical impairments, traditional interfaces are not only inconvenient; they are barriers. By enabling interaction through internal signals, this project proposes an alternative: technology that adapts to the user, not the other way around.

It is also grounded in a personal conviction—that technology should be an instrument of autonomy, not dependency. It should elevate human ability, not design around its absence.

Inspired by ongoing conversations around ethical design and inclusive systems, this work contributes to a future where devices don’t just respond—they understand. Where control begins not with contact, but with consciousness.

Development

One of the most promising directions in interaction design today lies in the development of gesture-based systems—technologies that allow users to communicate with machines through movement, intention, and biometric signals, without relying on conventional input methods. This project advances that vision by implementing a wearable system capable of capturing neuromuscular activity and translating it into functional, real-time commands through a Brain-Computer Interface.

At the core of the system is the Myo Armband, a wearable device that captures the electrical activity of the forearm muscles using an array of eight electromyography (EMG) sensors, combined with a gyroscope, accelerometer, and magnetometer. These sensors allow the device to interpret muscle contractions and arm movements with a high degree of precision. From a technical standpoint, Myo serves not only as a sensor platform but also as a bridge between human intention and machine behavior.

The system architecture integrates three primary components: the Myo armband for signal acquisition, a custom Android application for real-time data interpretation and command logic (developed using the Myo SDK), and an Arduino-based hardware module that executes actions based on received input. These elements communicate via Bluetooth, which lets gesture commands reach physical devices, such as lights or mechanical actuators, with low latency and no need for physical contact.
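The three-stage flow described above (recognised pose, transmitted command, executed action) can be illustrated with a minimal sketch. It is a simulation rather than the project's actual Android or Arduino code: the pose names follow the Myo's default gesture set, while the one-byte protocol (`b"1"` / `b"0"`), the `SimulatedActuator` class, and the choice of which pose maps to which command are assumptions made only for illustration.

```python
from enum import Enum
from typing import Iterable, Optional


class Pose(Enum):
    """Poses named after the Myo's default gesture set."""
    REST = "rest"
    FIST = "fist"
    WAVE_IN = "wave_in"
    WAVE_OUT = "wave_out"
    FINGERS_SPREAD = "fingers_spread"
    DOUBLE_TAP = "double_tap"


# Hypothetical one-byte protocol between the phone and the microcontroller.
POSE_TO_COMMAND = {
    Pose.FIST: b"1",            # assumed meaning: switch the actuator on
    Pose.FINGERS_SPREAD: b"0",  # assumed meaning: switch the actuator off
}


def encode_pose(pose: Pose) -> Optional[bytes]:
    """Phone side: return the byte to send, or None if the pose is unmapped."""
    return POSE_TO_COMMAND.get(pose)


class SimulatedActuator:
    """Stand-in for the microcontroller: holds a single on/off state."""

    def __init__(self) -> None:
        self.is_on = False

    def handle(self, command: bytes) -> None:
        if command == b"1":
            self.is_on = True
        elif command == b"0":
            self.is_on = False
        # Any other byte is ignored, so stray data cannot switch the load.


def run_pipeline(poses: Iterable[Pose], actuator: SimulatedActuator) -> bool:
    """Pass recognised poses through encode -> transmit -> act."""
    for pose in poses:
        command = encode_pose(pose)
        if command is not None:
            actuator.handle(command)
    return actuator.is_on
```

Keeping the pose-to-command table on the phone side means the microcontroller only has to understand a tiny, fixed vocabulary, which is one plausible way to keep the hardware module simple.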

This setup offers more than engineering novelty. By combining low-cost, open-source tools with real-time EMG signal processing, the system presents a practical, scalable model for inclusive neurotechnology. It transforms traditional limitations—price, complexity, and accessibility—into opportunities for broader social application.

The use of a mobile platform as the intermediary layer enhances mobility and usability. In a world where smartphones are nearly ubiquitous, integrating BCI control into a mobile environment ensures broader reach, especially for users in under-resourced settings. Moreover, it allows for greater flexibility in expanding functionality—whether for smart homes, prosthetics, or immersive interfaces.

At its core, this system demonstrates that the human body itself can become the interface—capable of controlling the environment through intention rather than touch. It reframes muscle activity not as background noise, but as a meaningful channel for communication and autonomy.

And beyond the interface, this is a model of ethical systems design. It embraces a principle too often overlooked in emerging technology: that innovation must begin with people. By using the body's own signals as the foundation for control, this system invites a future in which accessibility is not an afterthought, but a design principle from the start.

Project

Much of the Myo's power lies in how it transforms the user's interaction with a device, using signal processing techniques to create a BCI interaction. The way this transformation occurs is intriguing: simple as it may seem, the interaction is extremely complex and requires a large number of techniques to carry out.

Although different individuals appear to perform the same gesture, for example a fist, in the same way, at a microscopic level there is more complexity and variety than the human eye can perceive when observing a gesture. To reduce the impact of this variation in signal behavior, scientists use large amounts of collected electromyographic data to refine the algorithm that identifies a gesture and to minimize differences in how the EMG is interpreted.
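To make the idea of learning a gesture from many recordings concrete, the sketch below averages a simple feature (per-channel root-mean-square amplitude) over several example windows of each gesture and assigns a new window to the nearest average. This is a generic nearest-centroid classifier chosen for illustration, not the algorithm used by the Myo; the function names are hypothetical and the two-channel sample values are synthetic, invented only to exercise the code.

```python
import math
from typing import Dict, List, Sequence

Window = Sequence[Sequence[float]]  # rows are samples, columns are channels


def rms_features(window: Window) -> List[float]:
    """Root-mean-square amplitude of each channel over one window."""
    n_samples = len(window)
    n_channels = len(window[0])
    return [
        math.sqrt(sum(sample[ch] ** 2 for sample in window) / n_samples)
        for ch in range(n_channels)
    ]


def train_centroids(examples: Dict[str, List[Window]]) -> Dict[str, List[float]]:
    """Average the feature vectors of all recordings of each gesture."""
    centroids = {}
    for label, windows in examples.items():
        features = [rms_features(w) for w in windows]
        centroids[label] = [sum(col) / len(col) for col in zip(*features)]
    return centroids


def classify(window: Window, centroids: Dict[str, List[float]]) -> str:
    """Return the gesture whose centroid is closest in Euclidean distance."""
    feats = rms_features(window)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))


# Synthetic two-channel windows, invented purely for illustration.
fist_a = [[40, 5], [-42, -6], [38, 4], [-41, -5]]
fist_b = [[35, 8], [-36, -7], [37, 6], [-34, -9]]
rest_a = [[2, 1], [-3, -2], [2, 2], [-1, -1]]
rest_b = [[1, 3], [-2, -2], [3, 1], [-2, -3]]

centroids = train_centroids({"fist": [fist_a, fist_b], "rest": [rest_a, rest_b]})
new_fist = [[30, 10], [-33, -9], [31, 8], [-29, -11]]
print(classify(new_fist, centroids))  # prints "fist"
```

Averaging over several recordings is what absorbs the person-to-person and repetition-to-repetition variation: the more example windows contribute to a centroid, the less any single atypical contraction shifts it.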

The project started with a simple response to a pose, in order to demonstrate the BCI interaction that Myo provides. A prototype was built on the Arduino platform to light an LED corresponding to a gesture captured by Myo. Simple as this process may seem, it is of great importance for automation because it demonstrates knowledge of the entire process, and new features can be built on it in the future.

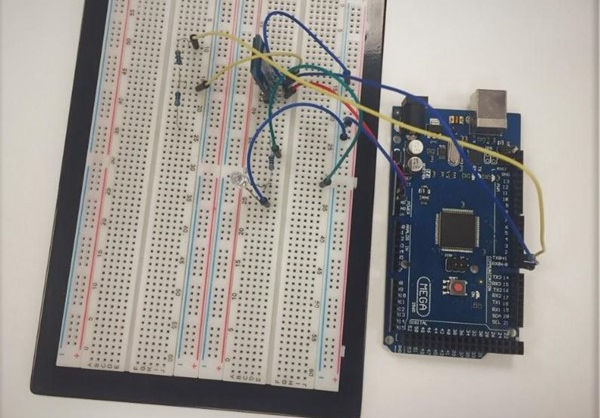

Using Arduino, a prototype was created to act on Myo interactions at a domestic level, focused here only on the automation of a light bulb. Replacing the usual interaction with a switch by a single gesture brings a new level of control and convenience to people's lives. Figure 6 illustrates one of the first prototypes of the integrated system.
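One practical detail in replacing a switch with a gesture is that a pose is usually held for many consecutive readings, so the light should change state only when the pose begins. The sketch below shows one way to do this with edge detection. The `GestureSwitch` class and the string pose labels are hypothetical and are not taken from the project's firmware.

```python
class GestureSwitch:
    """Toggle a light once per gesture, however long the pose is held."""

    def __init__(self, trigger_pose: str = "fist") -> None:
        self.trigger_pose = trigger_pose  # assumed pose label
        self.light_on = False
        self._previous_pose = "rest"

    def update(self, pose: str) -> bool:
        """Process one recognised pose and return the current light state."""
        entered_trigger = (
            pose == self.trigger_pose and self._previous_pose != self.trigger_pose
        )
        if entered_trigger:
            self.light_on = not self.light_on
        self._previous_pose = pose
        return self.light_on


switch = GestureSwitch()
states = [switch.update(p) for p in ["rest", "fist", "fist", "rest", "fist"]]
print(states)  # [False, True, True, True, False]
```

The second consecutive "fist" leaves the light on rather than toggling it again, which mirrors how a physical switch responds to a press rather than to being held.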

Interaction and studies with the Myo device evolved gradually throughout the project. Near the end of the project, it was possible to implement an IoT module that exemplified the development capability acquired, approximating a real experiment in communicating a BCI interaction. The module conceived and presented in this project then served as the basis for a proof of concept developed in a course of the Information Systems program, which offered more complete functionality in a real scenario.

Presented at the 7th SEMIC (ESPM Scientific Initiation Seminar), the project garnered academic attention for its multidisciplinary synthesis of neuroscience, systems design, signal processing, and human-computer interaction. It also sparked broader dialogue about the ethical potential of BCI systems: how technologies rooted in biological signal interpretation might serve not just function, but freedom.

Ultimately, this project demonstrates more than the technical viability of BCI-based gesture systems. It indicates that such systems can be inclusive, scalable, and anchored in human purpose. It reflects a design ethos that values autonomy over novelty, embodiment over abstraction, and access over complexity.

At its heart, this is not just a prototype—it is a statement: that interaction should not be a privilege mediated by tools, but a right restored through intention.

Future Directions

This project is not an endpoint—it is the foundation of a broader research vision. The validation of real-time, non-invasive interaction through EMG signal capture marks only the beginning of what neuroadaptive systems can become. Moving forward, I intend to integrate machine learning to refine gesture recognition and build adaptive models that learn from each user’s neuromuscular profile. These systems could expand to control neuroprosthetics, immersive environments, and multi-modal interfaces—responding to muscle, movement, and cognition simultaneously.

But with capability comes responsibility. As machines begin to interpret the body’s inner signals—its tensions, intentions, and microgestures—the question is no longer just how they respond, but how they should. Interaction design becomes a question of ethics: How do we encode dignity into systems that decode biology? How do we ensure that privacy, consent, and agency are built into technologies that sense and act on human expression? These are not theoretical considerations—they are structural decisions that must be embedded in architecture from the start.

This project has shaped my understanding of innovation not as technical achievement alone, but as a moral act. Signals are not just data—they are expressions of will. Building systems that interpret them requires more than precision; it requires care. I see human-machine interaction not as an efficiency problem, but as a space for reimagining connection. Neuroadaptive systems have the potential not just to respond—but to understand, to adapt, and to honor the person behind the signal.

My commitment is to develop technology that begins with the body and respects its language. I envision systems where interaction starts not with contact, but with consciousness—where control is not imposed, but reclaimed. Through interdisciplinary research, ethical design, and inclusive thinking, I aim to help shape a future where neurotechnology amplifies autonomy, restores capability, and designs with humanity at its core.

Looking ahead, this work opens the door to adaptive neuroprosthetics, haptic control systems, and ethical frameworks for AI signal interpretation. It has also shaped my research philosophy: that the signals we interpret are not just data—they are expressions of autonomy, effort, and identity. Technology, when designed with intention, can restore control to those excluded by conventional systems.